Now that everybody has a Full HD display, you’ve probably formed your own opinions on the quality of SDTV content. In the early days, it was a bit of a novelty to have your own broadcast-monitor experience, like being able to judge whether a news studio had upgraded to a digital tape format yet.

But SDTV will be with us for a long time; quite apart from the diversion that YouTube, cellphones and the scene have taken us on — something of a long-run historical blip — it’s inevitable that the standard “standard” still has another 20 years left in it. A large body of work is still being created in this format, so it’s not about to lose its replay value the way black & white did in the ’80s.

So we’ll be dealing with non-square pixels for some time to come. But I digress.

More than you might realise, our experience (and opinion) of SDTV is hugely influenced by the scaling process. The quality of upscaling has often left a lot to be desired, even at the broadcasters’ end: all but one of the five national networks in Australia used only field-based rendering until 2007. This meant that each field (1080i) or frame (720p) of an HDTV broadcast carried only 288 lines of detail, or “288p”. That is half of the 576 lines SDTV can deliver in a static image.
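Here is a minimal Python sketch of why per-field processing caps detail at 288 lines. It assumes a frame is just a list of scan lines. Simple line-doubling stands in for whatever per-field scaler a broadcaster actually used, and all the helper names are hypothetical. The test pattern alternates black and white on every line, which is the finest vertical detail 576 lines can hold. Each field on its own comes out uniformly black or uniformly white, so no per-field scaler can bring the pattern back. Weaving the two fields of a static image, by contrast, recovers it exactly.

```python
def split_fields(frame):
    """Split an interlaced frame (a list of scan lines) into its two fields."""
    return frame[0::2], frame[1::2]

def line_double(field):
    """Naive per-field scaling: repeat every field line twice (288 -> 576)."""
    return [line for line in field for _ in range(2)]

def weave(top, bottom):
    """Re-interleave two fields; only valid when nothing moved between them."""
    woven = []
    for t, b in zip(top, bottom):
        woven.extend([t, b])
    return woven

# Finest vertical detail 576 lines can carry: black and white on alternate lines.
WIDTH = 4
frame = [[255 * (y % 2)] * WIDTH for y in range(576)]

top, bottom = split_fields(frame)
print(len(top), set(v for line in top for v in line))        # 288 {0}
print(len(bottom), set(v for line in bottom for v in line))  # 288 {255}
print(weave(top, bottom) == frame)                           # True
print(line_double(top) == frame)                             # False
```

A real field-based scaler would interpolate rather than repeat lines. The limit is the same either way, because the detail above 288 lines simply isn't present in a single field.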

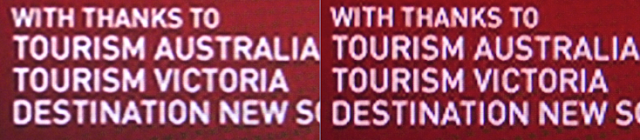

|

| The tell-tale signs of field-based scaling |

For several years, a $500 decoder box with HD outputs was more likely to do a good job than the networks were. By 2005, the last generation of 1376x768 “non-full” HDTVs could finally scale 480i or 576i properly, deriving the full detail from a static SD image. Full HD panels soon became a baseline feature, and the momentum was such that in 2006 it became de rigueur for HDTV displays to take a 1080i signal and derive a real 1080p picture from a static image. When you think about it, this was quite an achievement, with around 3 gigabits per second being processed by the internal chipsets.

24-bit colour × 1920 × 1080 × 60 fps = 2,985,984,000 bit/s (roughly 3 Gbit/s)

Double that again for “100Hz”/“120Hz” interpolation.
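The arithmetic above is easy to check in a short Python sketch. Like the original figure, it counts 24 bits per pixel and ignores chroma subsampling and blanking. The 50 Hz line is an added comparison for PAL regions such as Australia, where sets were marketed as “100Hz”. It is not a figure from the original calculation.

```python
def raw_bitrate(bits_per_pixel: int, width: int, height: int, fps: int) -> int:
    """Uncompressed video data rate in bits per second."""
    return bits_per_pixel * width * height * fps

# 1080i deinterlaced to 1080p at 60 frames per second, 24-bit colour.
rate_60 = raw_bitrate(24, 1920, 1080, 60)
print(f"{rate_60:,} bit/s")               # 2,985,984,000 bit/s
print(f"{2 * rate_60 / 1e9:.2f} Gbit/s")  # 5.97 Gbit/s after "120Hz" doubling

# 50 Hz regions start from 50 frames per second before "100Hz" doubling.
rate_50 = raw_bitrate(24, 1920, 1080, 50)
print(f"{rate_50:,} bit/s, doubled {2 * rate_50 / 1e9:.2f} Gbit/s")
# 2,488,320,000 bit/s, doubled 4.98 Gbit/s
```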

But by 2010, after a lot of network upgrades, the situation had turned. The networks were at last creating a nice image on their HD channels from their studio-quality SD sources (as the layman had always presumed). Now the only thing unnecessarily limiting quality on all those Full HD displays was the SD channels, or, to be accurate, the way that SD was handled.

A few things have helped mislead viewers into believing that everything on the HD channels was “in HD”, or HD-native, because the end-product of the SD channels was very far from SDTV best practice. One was “small” screen sizes: anything under 60 inches makes it hard for an untrained eye to tell the provenance of an HD end-product. Another was that the SD channels were more aggressively starved of bitrate. Yes, that’s a factor, but a more important one has been the state of the art of consumer-side scaling.

So, what's “best practice” with SDTV, even if you can find channels with DVD-like bitrates?

True, field-based processing is long gone. But once you’re done deinterlacing at very high quality, you’re faced with a 720x480p60 or 720x576p50 sequence that has to be displayed on a panel with anything but those pixel dimensions, and there are many ways to skin a cat. As people soon found, an “upscaling DVD player” with HDMI output often did a much better job of exactly the same thing the TV itself was supposed to do. The SD channels viewed through a TiVo, Sky+HD or IQ2 looked better than through the TV’s own tuner, or indeed through other decoder boxes.
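Part of what makes this non-trivial is that the 720x576 grid uses non-square pixels, so the horizontal and vertical scale factors differ. The Python sketch below uses the standard `fractions` module and assumes all 720 stored columns map to the full picture width. In practice the nominal active picture is slightly narrower than 720 samples, and careful scalers account for that.

```python
from fractions import Fraction

def pixel_aspect_ratio(display_aspect: Fraction, width: int, height: int) -> Fraction:
    """Width:height of one stored pixel needed to fill the display aspect ratio."""
    return display_aspect / Fraction(width, height)

# Assumption: all 720 stored columns map to the picture width.
par_wide = pixel_aspect_ratio(Fraction(16, 9), 720, 576)   # 64/45, about 1.42
par_narrow = pixel_aspect_ratio(Fraction(4, 3), 720, 576)  # 16/15, about 1.07

# Scale factors needed to fill a 1920x1080 panel from a 720x576 frame.
scale_x = Fraction(1920, 720)  # 8/3
scale_y = Fraction(1080, 576)  # 15/8

# The horizontal stretch exceeds the vertical one by exactly the 16:9 PAR.
print(scale_x / scale_y == par_wide)  # True
```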

|

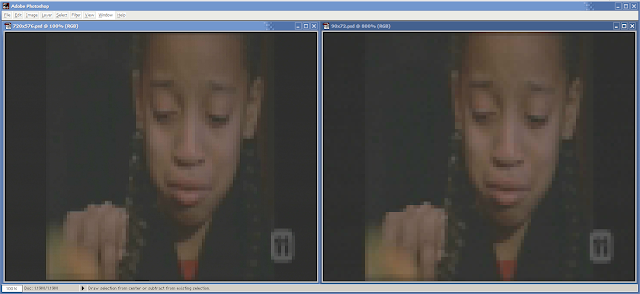

Both are examples of 576i upscaled to 1080i. Both are deriving frames via 576p.

On the left, TiVo. On the right, ‘another’ chipset, not utilising Faroudja technology :-) |

The example on the left is definitely what you want to live with day-to-day, unless you're lucky enough to live in Japan. You will have a very pleasant viewing experience on your Full HD panel if you apply this to studio-grade SD, and to SD sources derived from HD material — this is getting very common as cameras get upgraded more quickly than delivery mechanisms.

But we’re still left with a real-life problem: what about the macroblocks? In broadcast material, you’re often lucky to get effective detail as high as the nominal 720x480 or 720x576, and in streaming the problem is even more common. On broadcast, you’re most likely to see this unavoidable drop in resolution during a “quiet” scene while all hell is breaking loose on the other channels of the same transponder, if the broadcaster has an aggressive statmux’ing regime that rips the megabits out of the channel you’re watching. The I-frames become very visible and blocky at the start of every MPEG GOP, and the video starts “pulsating” with every group, generally once per second.

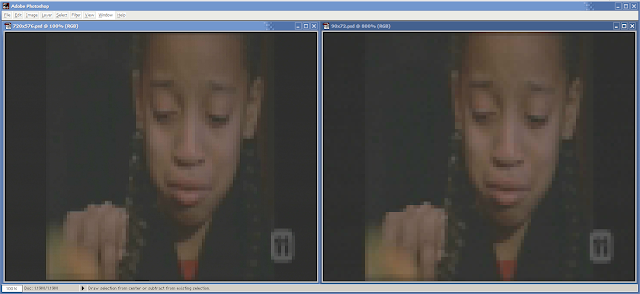

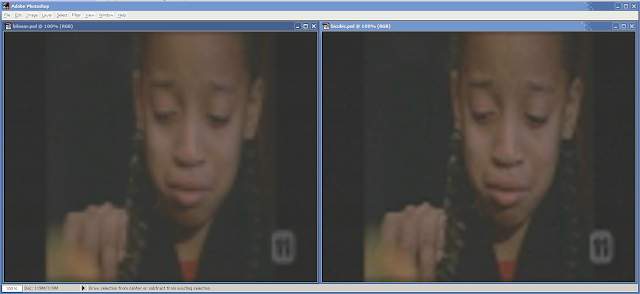

Here is one example of just such an occurrence. On the left is the anamorphic 720x576 image at 100%. On the right, I’ve converted the image to 90x72 and blown it up to view at 800%.

Spot the difference :-(

So, yes, for one frame we are watching the equivalent of a 72x72 postage-stamp sized video.

Hello, 1991 called — they want their QuickTime v1.0 video back.
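The 90x72 comparison is not arbitrary. MPEG-2 transforms the picture in 8x8 blocks, and 720/8 = 90 while 576/8 = 72. If the encoder leaves only the DC (average) coefficient in every block, each decoded block is flat, which is exactly a 90x72 image blown up by nearest-neighbour. The Python sketch below checks this with an idealised orthonormal DCT. It ignores quantisation, motion compensation and field/frame DCT modes, and the helper names are hypothetical. It runs on a small 32x24 frame because a pure-Python DCT over a full 720x576 frame is slow.

```python
import math
import random

N = 8  # MPEG-2 DCT block size

def _c(k):
    # Orthonormal DCT-II scale factor.
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)

def _cos(pos, freq):
    return math.cos((2 * pos + 1) * freq * math.pi / (2 * N))

def dct2(block):
    """Orthonormal 2-D DCT-II of an 8x8 block; result indexed [v][u]."""
    return [[_c(u) * _c(v) * sum(block[y][x] * _cos(x, u) * _cos(y, v)
                                 for y in range(N) for x in range(N))
             for u in range(N)] for v in range(N)]

def idct2(coeffs):
    """Inverse of dct2; result indexed [y][x]."""
    return [[sum(_c(u) * _c(v) * coeffs[v][u] * _cos(x, u) * _cos(y, v)
                 for v in range(N) for u in range(N))
             for x in range(N)] for y in range(N)]

def decode_dc_only(frame):
    """Worst-case starvation: keep only the DC coefficient of every 8x8 block."""
    h, w = len(frame), len(frame[0])
    out = [[0.0] * w for _ in range(h)]
    for by in range(0, h, N):
        for bx in range(0, w, N):
            block = [row[bx:bx + N] for row in frame[by:by + N]]
            dc = dct2(block)[0][0]
            coeffs = [[dc if u == v == 0 else 0.0 for u in range(N)] for v in range(N)]
            for y, row in enumerate(idct2(coeffs)):
                out[by + y][bx:bx + N] = row
    return out

def block_means(frame):
    """Average each 8x8 block, so 720x576 becomes 90x72."""
    h, w = len(frame), len(frame[0])
    return [[sum(frame[y][x] for y in range(by, by + N) for x in range(bx, bx + N)) / (N * N)
             for bx in range(0, w, N)] for by in range(0, h, N)]

def nearest_upscale(small, factor=N):
    """Blow each sample up into a factor x factor square, like the 800% view."""
    return [[v for v in row for _ in range(factor)] for row in small for _ in range(factor)]

random.seed(1)
frame = [[random.randint(16, 235) for _ in range(32)] for _ in range(24)]
decoded = decode_dc_only(frame)
expected = nearest_upscale(block_means(frame))
print(max(abs(a - b) for ra, rb in zip(decoded, expected) for a, b in zip(ra, rb)) < 1e-9)  # True
```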

Of course, with twenty years’ strides in technology, we should be able to do a better job than this.

The decoder should be signalling an alert to that fancy 1080i scaler connected to the HDMI port.

Or, if we’re not going to interfere with the 1920x1080 frame buffer, let’s at least do what we can with the 720x576 one. Presumably we still have to manufacture not-very-integrated chipsets that process video sequentially through intermediate frame buffers like this?

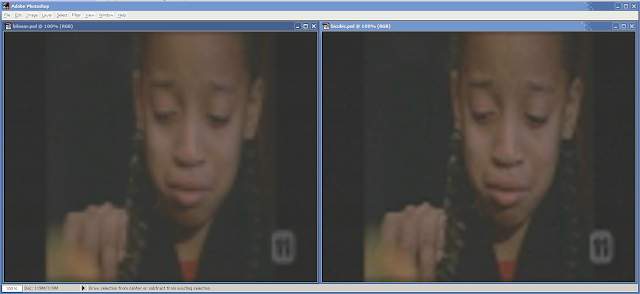

Left: Bilinear upscaling. Right: Bicubic upscaling.
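For reference, the Python sketch below is a minimal separable resampler that contrasts the two kernels in the comparison above. The tent kernel gives bilinear interpolation. The Keys cubic-convolution kernel with a = -0.5 is a common choice for “bicubic”. The original doesn’t say which bicubic variant produced the image, so treat a = -0.5 as an assumption. Edges are handled by replicating the border samples.

```python
import math

def linear_kernel(x):
    """Tent kernel: applied separably, this is bilinear interpolation."""
    x = abs(x)
    return 1.0 - x if x < 1.0 else 0.0

def cubic_kernel(x, a=-0.5):
    """Keys cubic-convolution kernel; a = -0.5 is a common 'bicubic' setting."""
    x = abs(x)
    if x < 1.0:
        return (a + 2) * x ** 3 - (a + 3) * x ** 2 + 1
    if x < 2.0:
        return a * x ** 3 - 5 * a * x ** 2 + 8 * a * x - 4 * a
    return 0.0

def resample_1d(src, out_len, kernel, support):
    """Resample a list of samples to out_len, aligning pixel centres."""
    n = len(src)
    scale = n / out_len
    out = []
    for i in range(out_len):
        x = (i + 0.5) * scale - 0.5          # output centre in source coordinates
        first = math.floor(x) - support + 1  # leftmost contributing tap
        acc = wsum = 0.0
        for j in range(first, first + 2 * support):
            w = kernel(x - j)
            acc += w * src[min(max(j, 0), n - 1)]  # replicate edge samples
            wsum += w
        out.append(acc / wsum)
    return out

def resample_2d(img, out_w, out_h, kernel, support):
    """Separable resampling: every row first, then every column."""
    rows = [resample_1d(r, out_w, kernel, support) for r in img]
    cols = [resample_1d([r[x] for r in rows], out_h, kernel, support) for x in range(out_w)]
    return [[cols[x][y] for x in range(out_w)] for y in range(out_h)]

# A hard edge, upscaled 8x as in the 90x72 -> 720x576 case.
edge = [0, 0, 0, 255, 255, 255]
lin = resample_1d(edge, 48, linear_kernel, 1)
cub = resample_1d(edge, 48, cubic_kernel, 2)
print(min(lin) >= 0, min(cub) < 0)  # True True
```

On a hard edge, the cubic kernel’s negative lobes produce slight overshoot (ringing). That is the price of its sharper result, and real implementations usually clamp the output to the legal range.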

The challenge here, the only challenge, is to identify how low to go (how much detail has actually survived the encoder) before applying a sane upscale, rather than some ridiculous nearest-neighbour copyblt lazy engineering. Of course, this information is already available during the MPEG decoding process. It’s also only really needed for lightweight MPEG-2 and SDTV decoding, which was conquered long ago but will be with us for decades yet. (In heavier scenarios, with MPEG-4 AVC deblocking and/or HDTV frames, this technique isn’t necessary.)
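One way to “identify how low to go” from data the decoder already has is to look at the highest non-zero DCT coefficient in each 8x8 block. The Python sketch below is a hypothetical heuristic, not a documented decoder feature. It assumes you can get at the dequantised coefficients for each block, indexed by [vertical][horizontal] frequency. A block whose highest non-zero frequency index is k is treated as carrying about (k+1)/8 of the nominal detail. The sketch then takes a quantile over all blocks, so that one busy block (a station logo, say) doesn’t mask starvation everywhere else.

```python
from typing import List, Sequence, Tuple

Block = Sequence[Sequence[float]]  # hypothetical: 8x8 coefficients, indexed [v][u]

def highest_nonzero(coeffs: Block) -> Tuple[int, int]:
    """Highest horizontal (u) and vertical (v) frequency index with a non-zero coefficient."""
    max_u = max_v = 0
    for v, row in enumerate(coeffs):
        for u, c in enumerate(row):
            if c != 0:
                max_u = max(max_u, u)
                max_v = max(max_v, v)
    return max_u, max_v

def effective_resolution(blocks: List[Block], width: int = 720, height: int = 576,
                         n: int = 8, quantile: float = 0.9) -> Tuple[int, int]:
    """Estimate the resolution the coded picture actually carries (hypothetical heuristic)."""
    peaks = [highest_nonzero(b) for b in blocks]
    us = sorted(p[0] for p in peaks)
    vs = sorted(p[1] for p in peaks)
    i = min(len(peaks) - 1, int(quantile * len(peaks)))
    return width * (us[i] + 1) // n, height * (vs[i] + 1) // n

# A starved frame in which every block kept only its DC coefficient.
dc_only = [[50.0] + [0.0] * 7] + [[0.0] * 8 for _ in range(7)]
print(effective_resolution([dc_only] * 100))  # (90, 72)
```

An estimate like this could then drive a resample down to that size and back up with a bicubic kernel, instead of displaying the blocks as they are.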

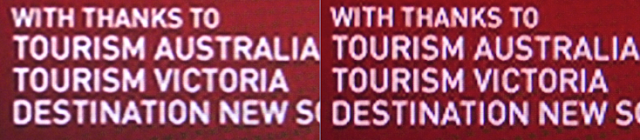

Left: a different frame, with far less severe bitrate starvation. Right: yet another frame, with ‘normal’ bitrate.

(Note that the quality recovery comes largely from P-frames coping a lot better under harsh conditions.)

Compare it with the more “normal” frames above to see how successful integrating the scaling and decompression stages can be.

By the way, the video sample here has been through a fairly typical process for older American TV shows in PAL/DVB countries:

film 24fps ⇨ 480i 23.976pSF ⇨ 576i 25pSF ⇨ 540x576 ⇨ padded to 720x576 for 16:9

On top of that, you then get all the 1080i scaling described further above.
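The numeric steps in that chain can be checked with a short Python sketch under two assumptions. The first is that the 23.976 to 25 step is the usual straight speed-up, with each film frame shown once about 4% faster, rather than a blended frame-rate conversion. The second is that the 540 columns are a 4:3 picture pillarboxed inside the 16:9 frame.

```python
from fractions import Fraction

film_fps = Fraction(24)
ntsc_film_fps = film_fps * Fraction(1000, 1001)  # the "23.976" rate
pal_fps = Fraction(25)

# Playing the 23.976 material at 25 frames per second speeds it up.
speedup = pal_fps / ntsc_film_fps
print(round(float(ntsc_film_fps), 3))  # 23.976
print(f"{float(speedup - 1):.2%}")     # 4.27%

# A 4:3 picture inside a 16:9 frame of 720 stored columns.
active_width = 720 * Fraction(4, 3) / Fraction(16, 9)
print(active_width, (720 - active_width) / 2)  # 540 90
```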